Advanced driver assistance system for distraction and drowsiness detection using facial landmarks

DOI:

https://doi.org/10.54753/cedamaz.v13i1.1814

Keywords:

Facial landmark, Drowsiness detection, Distraction detection, Computer vision

Abstract

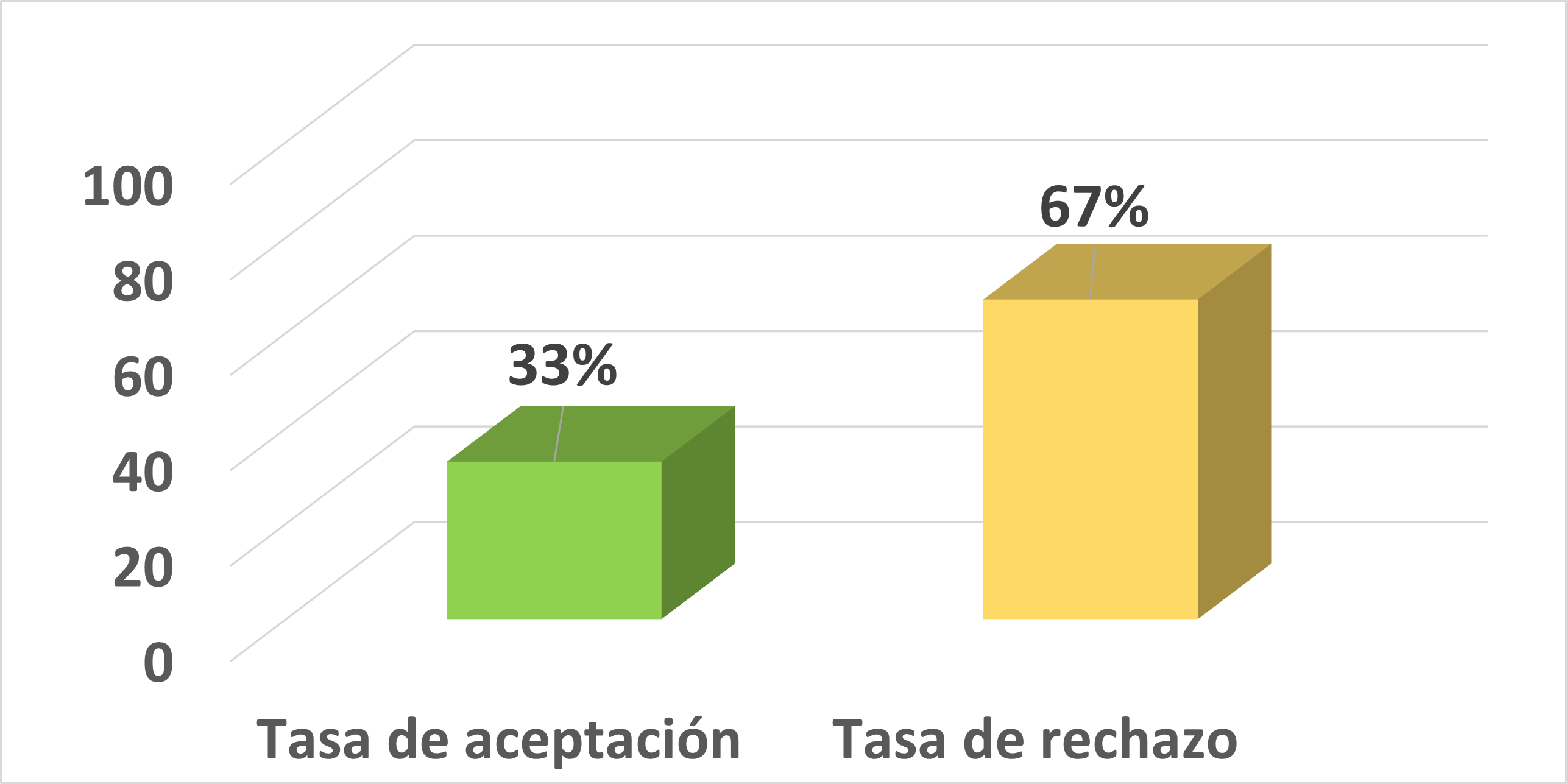

This article presents the development of an advanced driver assistance system that detects drowsiness and distraction in real time, using computer vision as a means of reducing traffic accidents. It first discusses the problem of traffic accidents in Ecuador in 2021 and then the different classes of distraction and drowsiness. Next, it presents the most notable research on drowsiness and distraction, together with the types of detection used. It then describes the methodologies applied in this research: the Methodology for systematic literature review applied to engineering and education for the literature review, and the SCRUM methodology for the implementation of the project. Drowsiness and distraction are both detected from facial landmarks. For drowsiness, the eye aspect ratio (EAR) is used; as an innovative contribution to distraction detection, a difference in horizontal distances is incorporated.
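The two landmark-based measures named in the abstract can be illustrated with a minimal sketch. The EAR function follows the definition by Soukupova and Cech (2016), EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2 ‖p1 − p4‖), for six eye landmarks p1..p6. The abstract does not specify how the difference in horizontal distances is computed, so `horizontal_distance_difference`, its choice of landmarks (nose tip and the two face-contour edges), and all sample coordinates are assumptions made purely for illustration and may not match the authors' method.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def eye_aspect_ratio(eye):
    """EAR for six eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid,
    p5 and p6 on the lower lid (Soukupova and Cech, 2016).
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = euclidean(p2, p6) + euclidean(p3, p5)
    horizontal = euclidean(p1, p4)
    return vertical / (2.0 * horizontal)


def horizontal_distance_difference(left_edge, nose_tip, right_edge):
    """Hypothetical distraction cue (an assumption, not the paper's formula).

    Compares the horizontal distance from the nose tip to each side of the
    face contour. A value near zero suggests a frontal face; a large
    magnitude suggests the head is turned to one side.
    """
    left = abs(nose_tip[0] - left_edge[0])
    right = abs(right_edge[0] - nose_tip[0])
    return left - right


# Made-up landmark coordinates in pixels, for illustration only.
open_eye = [(0, 0), (2, 2), (4, 2), (6, 0), (4, -2), (2, -2)]
closed_eye = [(0, 0), (2, 0.3), (4, 0.3), (6, 0), (4, -0.3), (2, -0.3)]

ear_open = eye_aspect_ratio(open_eye)
ear_closed = eye_aspect_ratio(closed_eye)

frontal = horizontal_distance_difference((0, 5), (5, 5), (10, 5))
turned = horizontal_distance_difference((0, 5), (8, 5), (10, 5))

print(f"EAR open={ear_open:.3f} closed={ear_closed:.3f}")
print(f"horizontal difference frontal={frontal} turned={turned}")
```

In a real-time system a measure like this would typically be compared against a threshold over several consecutive frames before raising an alert; the threshold values and frame counts would have to be calibrated and are not given in the abstract.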

References

Abouelnaga, Y., Eraqi, H. M., and Moustafa, M. N. (2017). Real-time distracted driver posture classification. arXiv preprint arXiv:1706.09498.

Agencia Nacional de Tránsito del Ecuador (ANT). (2023). Visor de siniestralidad – estadísticas – Agencia Nacional de Tránsito del Ecuador – ANT. Retrieved from https://www.ant.gob.ec/visor-de-siniestralidad-estadisticas/

Ahmed, J., Li, J.-P., Khan, S. A., and Shaikh, R. A. (2015). Eye behaviour based drowsiness detection system. In 2015 12th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP) (pp. 268–272).

Azman, A., Meng, Q., Edirisinghe, E., and Azman, H. (2012). Eye and mouth movements extraction for driver cognitive distraction detection. In 2012 IEEE Business, Engineering & Industrial Applications Colloquium (BEIAC) (pp. 220–225).

Cueva, L. D. S., and Cordero, J. (2020). Advanced driver assistance system for the drowsiness detection using facial landmarks. In 2020 15th Iberian Conference on Information Systems and Technologies (CISTI) (pp. 1–4).

Fernández, A., Usamentiaga, R., Carús, J. L., and Casado, R. (2016). Driver distraction using visual-based sensors and algorithms. Sensors, 16(11), 1805.

Intelligent Behaviour Understanding Group (iBUG). (2023). i·bug - resources - facial point annotations. Retrieved from https://ibug.doc.ic.ac.uk/resources/facial-point-annotations/

Hossain, M. Y., and George, F. P. (2018). IoT based real-time drowsy driving detection system for the prevention of road accidents. In 2018 International Conference on Intelligent Informatics and Biomedical Sciences (ICIIBMS) (Vol. 3, pp. 190–195).

Junaedi, S., and Akbar, H. (2018). Driver drowsiness detection based on face feature and PERCLOS. In Journal of Physics: Conference Series (Vol. 1090, p. 012037).

Maralappanavar, S., Behera, R., and Mudenagudi, U. (2016). Driver’s distraction detection based on gaze estimation. In 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI) (pp. 2489–2494).

Murphy-Chutorian, E., and Trivedi, M. M. (2010). Head pose estimation and augmented reality tracking: An integrated system and evaluation for monitoring driver awareness. IEEE Transactions on Intelligent Transportation Systems, 11(2), 300–311.

Nambi, A. U., Bannur, S., Mehta, I., Kalra, H., Virmani, A., Padmanabhan, V. N., . . . Raman, B. (2018). HAMS: Driver and driving monitoring using a smartphone. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking (pp. 840–842).

Reddy, B., Kim, Y.-H., Yun, S., Seo, C., and Jang, J. (2017). Real-time driver drowsiness detection for embedded system using model compression of deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (pp. –128).

Regan, M. A., Lee, J. D., and Young, K. (2008). Driver distraction: Theory, effects, and mitigation. CRC Press.

Rosales Mayor, E., and Rey De Castro Mujica, J. (2010). Somnolencia: Qué es, qué la causa y cómo se mide. Acta Médica Peruana, 27(2), 137–143.

Soukupova, T., and Cech, J. (2016). Eye blink detection using facial landmarks. In 21st Computer Vision Winter Workshop, Rimske Toplice, Slovenia (p. 2).

Torres-Carrión, P. V., González-González, C. S., Aciar, S., and Rodríguez-Morales, G. (2018). Methodology for systematic literature review applied to engineering and education. In 2018 IEEE Global Engineering Education Conference (EDUCON) (pp. 1364–1373).

World Health Organization (WHO). (2018). Global status report on road safety 2018. Retrieved from https://www.who.int/publications/i/item/9789241565684

Zhao, M. (2015). Advanced driver assistant system, threats, requirements, security solutions. Intel Labs, 2–3.

License

Copyright (c) 2023 CEDAMAZ

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Authors who publish in this journal accept the following terms:

- After the scientific article is accepted for publication, the author agrees to transfer the rights of the first publication to the CEDAMAZ Journal, but the authors retain the copyright. The total or partial reproduction of the published texts is allowed as long as it is not for profit. When the total or partial reproduction of scientific articles accepted and published in the CEDAMAZ Journal is carried out, the complete source and the electronic address of the publication must be cited.

- Scientific articles accepted and published in the CEDAMAZ Journal may be deposited by the authors in their entirety in any repository for non-commercial purposes.

- Authors should not distribute accepted scientific articles that have not yet been officially published by CEDAMAZ. Failure to comply with this rule will result in the rejection of the scientific article.

- The publication of your work will be simultaneously subject to the Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.