Systematic literature review: characteristics and functioning of the BERT and SQuAD models

Keywords:

BERT, SQuAD, Covid, Answers to Questions, Conversational agents

Abstract

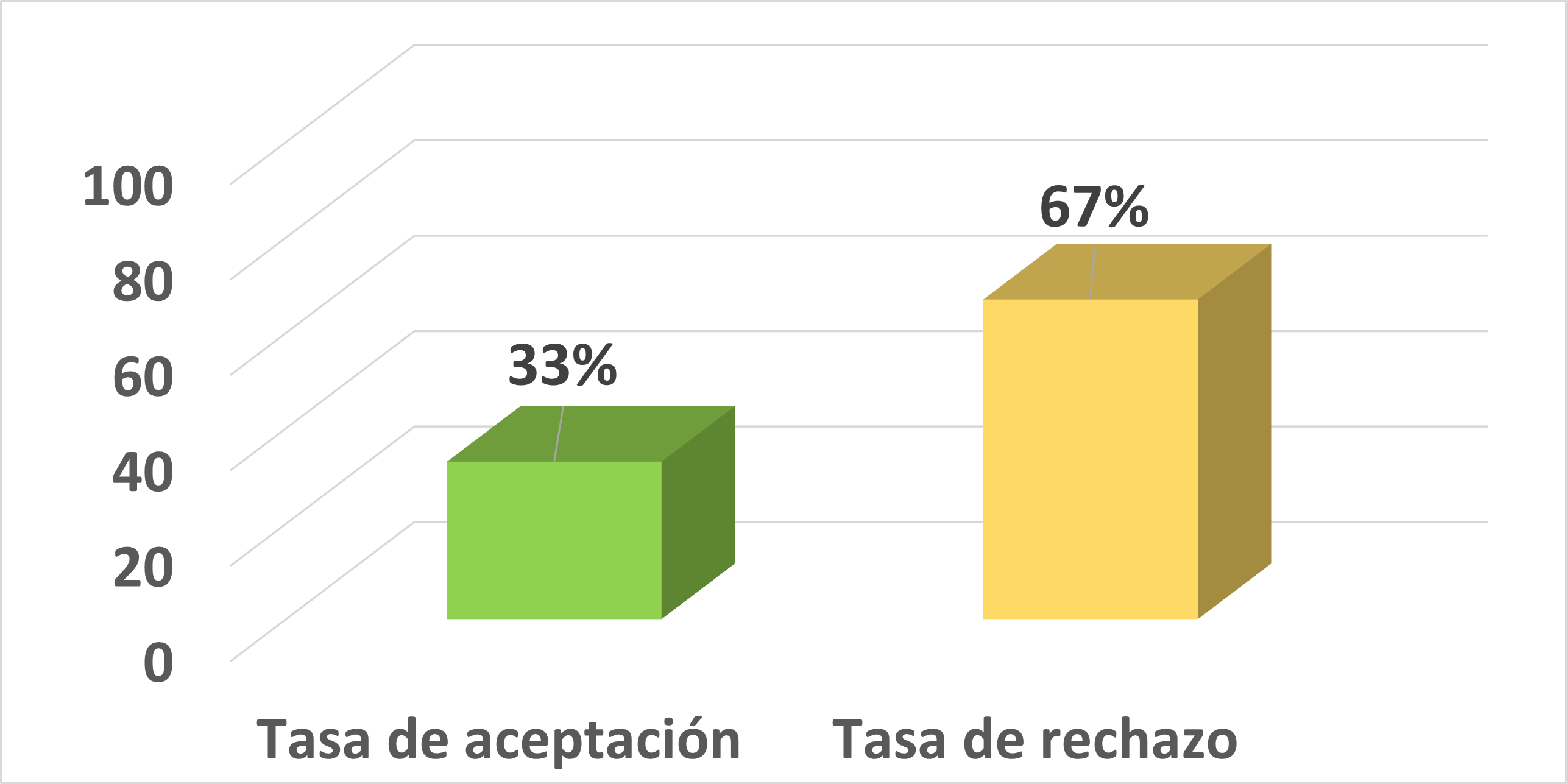

During the Covid-19 pandemic, health systems have collapsed, in most cases causing human and economic losses, forcing the population into confinement, and limiting access to health centers. This has led to deaths from lack of access to basic medical care, such as consultations on the main symptoms. This Systematic Literature Review (SLR) was undertaken to identify which characteristics of BERT and SQuAD, and which conditions for optimal performance, are needed in order to later develop a virtual agent focused on answering questions on common Covid-19 topics. Such an agent would extend Covid-19 assistance to more of the population, since health centers are not able to meet demand. The SLR followed the phases of Barbara Kitchenham’s methodology and was guided by three research questions, which defined the course of the review. It identified PyTorch and TensorFlow as the frameworks for software development, Python as the programming language because of its ties to machine learning, the BERT BASE model as the option suited to low-resource hardware, and SQuAD 2.0 as the more complete dataset with respect to pairs of questions and reasonable answers.

References

Ayoub, J., Yang, X. J., Zhou, F. (2021). Combat COVID-19 infodemic using explainable natural language processing models. Information Processing and Management, 58(4). https://doi.org/10.1016/j.ipm.2021.102569

Balagopalan, A., Eyre, B., Robin, J., Rudzicz, F., Novikova, J. (2021). Comparing Pre-trained and Feature-Based Models for Prediction of Alzheimer’s Disease Based on Speech. Frontiers in Aging Neuroscience, 13. https://doi.org/10.3389/fnagi.2021.635945

Bruke Mammo, Praveer Narwelkar, R. G. (2018). Towards Evaluating the Complexity of Sexual Assault Cases with Machine Learning. 1–25.

Chang, D., Hong, W. S., Taylor, R. A. (2020). Generating contextual embeddings for emergency department chief complaints. JAMIA Open, 3(2), 160–166. https://doi.org/10.1093/jamiaopen/ooaa022

Chintalapudi, N., Battineni, G., Amenta, F. (2021). Sentimental analysis of COVID-19 tweets using deep learning models. Infectious Disease Reports, 13(2). https://doi.org/10.3390/IDR13020032

Devlin, J., Chang, M. W., Lee, K., Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. NAACL HLT 2019 - 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies - Proceedings of the Conference, 1, 4171–4186. https://github.com/tensorflow/tensor2tensor

El-Geish, M. (2020). Gestalt: a Stacking Ensemble for SQuAD2.0. http://arxiv.org/abs/2004.07067

Gao, Z., Feng, A., Song, X., Wu, X. (2019). Target-dependent sentiment classification with BERT. IEEE Access, 7, 154290–154299. https://doi.org/10.1109/ACCESS.2019.2946594

Hulburd, E. (2020). Exploring BERT Parameter Efficiency on the Stanford Question Answering Dataset v2.0. http://arxiv.org/abs/2002.10670

Kitchenham, B., Charters, S. (2007). Guidelines for performing Systematic Literature Reviews in Software Engineering.

Liu, H., Perl, Y., Geller, J. (2019). Transfer Learning from BERT to Support Insertion of New Concepts into SNOMED CT. AMIA. Annual Symposium Proceedings. AMIA Symposium, 2019, 1129–1138.

Maghraoui, K. El, Herger, L. M., Choudary, C., Tran, K., Deshane, T., Hanson, D. (2021). Performance Analysis of Deep Learning Workloads on a Composable System. 1, 1–10. http://arxiv.org/abs/2103.10911

Özçift, A., Akarsu, K., Yumuk, F., Söylemez, C. (2021). Advancing natural language processing (NLP) applications of morphologically rich languages with bidirectional encoder representations from transformers (BERT): an empirical case study for Turkish. Automatika. https://doi.org/10.1080/00051144.2021.1922150

Petticrew, M., Roberts, H. (2008). Systematic Reviews in the Social Sciences: A Practical Guide. In Systematic Reviews in the Social Sciences: A Practical Guide. Blackwell Publishing Ltd. https://doi.org/10.1002/9780470754887

Rajpurkar, P., Jia, R., Liang, P. (2018). Know what you don’t know: Unanswerable questions for SQuAD. ArXiv Preprint ArXiv:1806.03822.

Rajpurkar, P., Zhang, J., Lopyrev, K., Liang, P. (2016). SQuAD: 100,000+ questions for machine comprehension of text. EMNLP 2016 - Conference on Empirical Methods in Natural Language Processing, Proceedings, 2383–2392. https://doi.org/10.18653/v1/d16-1264

Su, L., Guo, J., Fan, Y., Lan, Y., Cheng, X. (2019). Controlling Risk of Web Question Answering. SIGIR 2019 - Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, 115–124. https://doi.org/10.1145/3331184.3331261

Su, M. H., Wu, C. H., Cheng, H. T. (2020). A Two-Stage Transformer-Based Approach for Variable-Length Abstractive Summarization. IEEE/ACM Transactions on Audio Speech and Language Processing, 28, 2061–2072. https://doi.org/10.1109/TASLP.2020.3006731

Vinod, P., Safar, S., Mathew, D., Venugopal, P., Joly, L. M., George, J. (2020, June 1). Fine-tuning the BERTSUMEXT model for clinical report summarization. 2020 International Conference for Emerging Technology, INCET 2020. https://doi.org/10.1109/INCET49848.2020.9154087

Yang, X., Zhang, H., He, X., Bian, J., Wu, Y. (2020). Extracting family history of patients from clinical narratives: Exploring an end-to-end solution with deep learning models. JMIR Medical Informatics, 8(12). https://doi.org/10.2196/22982

Zadeh, A. H., Edo, I., Awad, O. M., Moshovos, A. (2020). GOBO: Quantizing attention-based NLP models for low latency and energy efficient inference. Proceedings of the Annual International Symposium on Microarchitecture, MICRO, 2020-Octob, 811–824. https://doi.org/10.1109/MICRO50266.2020.00071

Zeng, K., Pan, Z., Xu, Y., Qu, Y. (2020). An ensemble learning strategy for eligibility criteria text classification for clinical trial recruitment: Algorithm development and validation. JMIR Medical Informatics, 8(7). https://doi.org/10.2196/17832

Zhou, Y., Yang, Y., Liu, H., Liu, X., Savage, N. (2020). Deep Learning Based Fusion Approach for Hate Speech Detection. IEEE Access, 8, 128923–128929. https://doi.org/10.1109/ACCESS.2020.3009244

License

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

Authors who publish in this journal accept the following terms:

- After the scientific article is accepted for publication, the author agrees to transfer the rights of the first publication to the CEDAMAZ Journal, but the authors retain the copyright. The total or partial reproduction of the published texts is allowed as long as it is not for profit. When the total or partial reproduction of scientific articles accepted and published in the CEDAMAZ Journal is carried out, the complete source and the electronic address of the publication must be cited.

- Scientific articles accepted and published in the CEDAMAZ journal may be deposited by the authors in their entirety in any repository without commercial purposes.

- Authors should not distribute accepted scientific articles that have not yet been officially published by CEDAMAZ. Failure to comply with this rule will result in the rejection of the scientific article.

- The publication of your work will be simultaneously subject to the Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) license.